You’d be forgiven for not recognizing the term “testability,” despite its central importance to the W3C’s new Web Content Accessibility Guidelines (WCAG 2.0). There’s little mention of testability in WCAG 2.0 documents—and given the verbosity of the guidelines, the absence of information about testability seems almost purposeful. Indeed, testability is one of WCAG 2.0’s big secrets: while most of the public complaints about WCAG 2.0 have been about technology neutrality, jargon, and the lack of attention to people with cognitive disabilities, the underlying cause behind these issues—testability—has taken a back seat.

So what is testability, and why does it matter? Before we can answer that, we need to go back to the beginning.

The World Wide Web Consortium (W3C) formed the Web Content Accessibility Guidelines Working Group in late 1998. In May 1999, the Working Group released a set of accessibility development and content guidelines, called the Web Content Accessibility Guidelines (WCAG 1.0). Almost immediately, the Working Group began working on the second version of the guidelines, WCAG 2.0. I joined the Working Group in May of 2000 as an Invited Expert and was active in the Working Group, with two notable absences, until August of 2006. Based on my experience as a member of the Working Group—and of the larger accessibility community—I believe that many of the problems associated with WCAG 2.0 can be attributed to testability.

So once again, what is testability, exactly? Although testability is mentioned in the abstract of the recent WCAG 2.0 working draft documents and expanded in the “Conformance” section, a full definition sits not in the glossary but in the Requirements for WCAG 2.0 Checklists and Techniques, dated 7 February, 2003. Within this document, you will find the only definition of testability as it applies to WCAG 2.0. Here’s that definition:

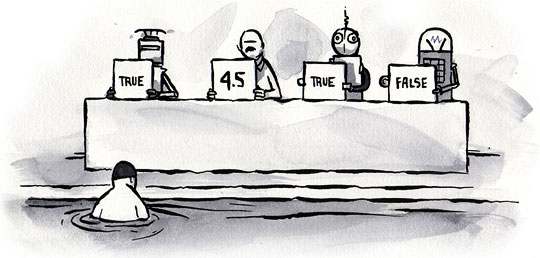

Definition: Testable: Either Machine Testable or Reliably Human Testable.

Definition: Machine Testable: There is a known algorithm (regardless of whether that algorithm is known to be implemented in tools) that will determine, with complete reliability, whether the technique has been implemented or not. Probabilistic algorithms are not sufficient.

Definition: Reliably Human Testable: The technique can be tested by human inspection and it is believed that at least 80% of knowledgeable human evaluators would agree on the conclusion. The use of probabilistic machine algorithms may facilitate the human testing process but this does not make it machine testable.

Definition: Not Reliably Testable: The technique is subject to human inspection but it is not believed that at least 80% of knowledgeable human evaluators would agree on the conclusion.

In simpler terms, the Web Content Accessibility Guidelines Working Group defines a testable success criterion as one that is:

- machine-testable or

- “reliably human testable”—which means that eight out of ten human testers must agree on whether the site passes or fails each success criterion.
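Read as a decision rule, the definition above is short enough to sketch in code. The following Python is an illustration only, not anything published by the Working Group: the names `Testability`, `classify`, and `AGREEMENT_THRESHOLD` are hypothetical, and `expected_agreement` stands in for the definition's "it is believed that" estimate, which the source does not say how to obtain.

```python
from enum import Enum


class Testability(Enum):
    MACHINE_TESTABLE = "machine testable"
    RELIABLY_HUMAN_TESTABLE = "reliably human testable"
    NOT_RELIABLY_TESTABLE = "not reliably testable"


# The 80% figure comes from the quoted definition.
AGREEMENT_THRESHOLD = 0.8


def classify(has_deterministic_algorithm: bool, expected_agreement: float) -> Testability:
    """Classify a technique according to the quoted definitions.

    has_deterministic_algorithm: True if a known, fully reliable
        (non-probabilistic) algorithm can decide pass or fail.
    expected_agreement: assumed fraction (0.0 to 1.0) of knowledgeable
        human evaluators expected to reach the same conclusion.
    """
    if not 0.0 <= expected_agreement <= 1.0:
        raise ValueError("expected_agreement must be between 0.0 and 1.0")
    if has_deterministic_algorithm:
        return Testability.MACHINE_TESTABLE
    if expected_agreement >= AGREEMENT_THRESHOLD:
        return Testability.RELIABLY_HUMAN_TESTABLE
    return Testability.NOT_RELIABLY_TESTABLE


def is_testable(has_deterministic_algorithm: bool, expected_agreement: float) -> bool:
    """A technique is 'testable' if it falls in either of the first two classes."""
    result = classify(has_deterministic_algorithm, expected_agreement)
    return result is not Testability.NOT_RELIABLY_TESTABLE
```

Note that the whole rule hinges on a single number, the agreement estimate, that nobody actually measures: it is a belief about what evaluators would do.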

Testability first entered the scene in 2000 as a response to criticism directed at WCAG 1.0—specifically, that some of the guidelines were being ignored because they were too broad or vague. One example is the WCAG 1.0 checkpoint, “Use clear and simple language.” The general consensus was that this checkpoint was open to interpretation and people didn’t know how to comply. Cue testability.

At first glance, testability seems not only reasonable but integral to the development of a successful WCAG 2.0: how else will developers know they have complied with an accessibility requirement? Yet the WCAG Samurai Errata are but one example of a set of guidelines that don’t rely on testability but do give developers clear instructions on how to comply with relevant requirements. And after all, the Working Group was created to write success criteria that assist people with disabilities. Success criteria that are integral to helping people with disabilities use the web are being outlawed due to WCAG 2.0’s testability requirement; their validity as success criteria is not at issue. Once an insistence on testability begins outlawing otherwise useful success criteria, it needs to be reconsidered.

We lose too much

The problem with testability is that even the most reasonable of success criteria can be non-testable—and if a success criterion is not considered testable, it isn’t included in WCAG 2.0. Whether the criterion is an otherwise useful technique that improves accessibility is now irrelevant to whether it gets included in WCAG 2.0. Due to the testability requirement, many useful success criteria have been removed from WCAG 2.0, and others watered down.

For example, it has been argued that the accessibility specialist’s old faithful, alt attributes for images, fails the testability requirement—and the tangled logic required to make them seem testable has made the guideline weaker. I lodged the following comment on the Last Call Working Draft on Guideline 1.1.1, which reads:

For all non-text content that is used to convey information, text alternatives identify the non-text content and convey the same information. (Emphasis added).

In my comment, I argued that a machine can never test whether an alt attribute conveys the same information as an image, and that eight out of ten human testers could not agree whether the text conveys the same information. I gave the following example:

…in the Live in Victoria site (www.liveinvictoria.vic.gov.au) there is an image under the heading “Business Migrants”. When I worked on this site, several people said this image should have a null ALT attribute as it conveyed no information. Several other people suggested ALT attributes of “A couple of business migrants chatting at work” or “Guys chatting at work”.

Whereas the ALT attribute that I recommended was “There is a wealth of opportunities for Business Migrants in Victoria”.

Although I received a roundabout response from the Working Group on my comment, their public online comment tracker dated 12 January, 2007 proves more insightful:

With regard to 1.1 the success criteria do not require that ALT text provided by different people be the same. In fact the sufficient techniques only require that ALT text be present that can be construed to be ALT text. The requirment [sic] is for alt text to be present. Since the quality of the alt text can not be measured, there is no specific criterion for quality. (Emphasis added.)

It looks like insistence on testability has brought us back to the good old days of alt=“image”, except that we have no guidelines to point to when we tell developers that the description is wrong. To be fair, the Working Group has tried to get around this particular problem by adding a few clauses to Guideline 1.1.1, for example to allow ornamental images to have null ALT attributes. However this particular clause seems inherently untestable.

…if non-text content is pure decoration, or used only for visual formatting, or if it is not presented to users, then it is implemented such that it can be ignored by assistive technology.

What kind of assistive technology? What versions? Ignored as a default, or only if the user chooses to ignore it?
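Returning to the tracker's statement that the requirement is only for alt text to be present: that reading is trivially machine-testable, which is exactly the problem. Here is a minimal sketch of the check it implies, written with Python's standard `html.parser` module. The class and function names are hypothetical, and this is not a Working Group tool; it simply reports `img` elements that have no `alt` attribute at all.

```python
from html.parser import HTMLParser


class AltPresenceChecker(HTMLParser):
    """Collects the src of every <img> that lacks an alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        # Presence is all that is checked: alt="" and alt="image" both pass.
        if "alt" not in attr_map:
            self.missing.append(attr_map.get("src", ""))


def images_missing_alt(html: str) -> list:
    """Return the src values of images with no alt attribute."""
    checker = AltPresenceChecker()
    checker.feed(html)
    checker.close()
    return checker.missing


sample = (
    '<img src="a.png">'
    '<img src="b.png" alt="">'
    '<img src="c.png" alt="image">'
    '<img src="d.png" alt="There is a wealth of opportunities '
    'for Business Migrants in Victoria">'
)
print(images_missing_alt(sample))  # only a.png is reported
```

The check cannot tell `alt="image"` from the text I recommended for the Live in Victoria site; both pass equally well.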

There are many instances in WCAG2 where success criteria are actually not testable, and the Working Group knows it. In Bugzilla, the Working Group’s issue tracking system, there is a tracked issue lodged by three Working Group members that reads: “In particular, the current wording [of WCAG2] does not seem testable. Words such as, ‘key,’ ‘consistent,’ ‘predictable,’ ‘inconsistent,’ and ‘unpredictable’ are subjective.” Yet these terms have been used throughout WCAG2; there’s even an entire guideline that rests on one of these subjective, non-testable terms:

Make Web pages appear and operate in predictable ways.

Where possible, the Working Group has tried to narrowly define success criteria to make them testable: success criterion 1.1.1, with four sub-sections, is equivalent to WCAG1 Checkpoint 1.1: “Provide a text equivalent for every non-text element.” But this just highlights another problem with testability—it increases the complexity of the success criteria. Because WCAG2 is technology-neutral, the guidelines have to be testable in a technology-neutral way, a situation that produces lengthy and jargon-heavy guidelines. In contrast, the WCAG Samurai Errata are an example of the type of guidelines that can be developed without the constraint of testability (and technology neutrality).

Cognitive disabilities neglected

One criticism of the first version of WCAG was that most of the cognitive-disability–related checkpoints were relegated to Level AAA, a level rarely attempted. Only one checkpoint dedicated to the needs of people with cognitive disabilities was in the minimum level (Checkpoint 14.1: “Ensure language is clear and simple”). However, with the introduction of testability, this checkpoint was removed from WCAG2 in April 2004. It was this checkpoint that initially piqued my interest in testability, and when it became clear that this checkpoint was being removed (not because it wasn’t a valid checkpoint, but because it simply wasn’t testable), I proposed the removal of testability. As a member (in good standing) of the W3C Web Content Accessibility Guidelines Working Group, on April 22, 2004, via teleconference, I argued that:

if you lock out guidelines [because] we can not define them in a testable manner, then we run the risk of locking out guidelines that people find useful and that increase the accessibility of content … [non-testable guidelines] should not be relegated to highest level (3) because we can not define them in a certain way … they should be defined in way that is most assistive to people with disabilities.

—http://www.w3.org/2004/04/22-wai-wcag-irc.html

At that point, the fate of testability, which wasn’t yet applied to all success criteria, was put to a vote, and many people voted against its removal. During the same teleconference, the Working Group held another vote to decide whether testability should be a required characteristic of all success criteria, and I was the only person on the Working Group who voted against this change. A week later, my status as a member (in good standing) was revoked due to “non-participation.” At the next teleconference, the inclusion of testability was passed unanimously.

Many other techniques to assist people with cognitive disabilities, from error prevention to summary information, have also been deleted from WCAG 2.0 or moved to Level AAA or to the advisory techniques. In fact, WCAG 2.0 does so little to assist people with cognitive disabilities that a formal objection was lodged (co-signed by me) and a task force created to discuss the matter. Unfortunately, the Working Group’s main response to the formal objection is to preface WCAG 2.0 with a statement that declares the guidelines not sufficient to assist people with cognitive disabilities. It is troubling that a set of guidelines aimed at assisting people with disabilities should entirely neglect the large number of web users with cognitive disabilities.

Still, it’s not really a surprise. The Working Group minutes are littered with various comments warning against using testability for the entirety of WCAG 2.0—something it was never originally intended for. Even the group’s chair, Gregg Vanderheiden, said “if we require a test for every checkpoint, life will be difficult in the realm of cognitive accessibility.” And that’s precisely what has come to pass.

Calling for an end to testability

There are many reasons why testability was introduced and remains a tenet of WCAG 2.0. Some of these reasons may be valid and important—the W3C is seeking ISO certification, the WAI want WCAG 2.0 enshrined in law—but none should be allowed to draw attention away from the core goal of directly improving the ability of people with disabilities to access websites.

When success criteria are removed because they are not testable—even if they are otherwise valid and useful success criteria—the Working Group has lost its way, and we need to guide them back to the right path. The Working Group has made significant changes to WCAG 2.0 after the Last Call Working Draft; among other things, one of the most contentious issues, baseline, has been significantly modified. The Working Group needs to go one step further and remove testability, lest they risk alienating both developers and accessibility specialists. With the publication of the WCAG Samurai Errata, the web community finally has a choice—and if WCAG 2.0 continues to be unworkable, developers will simply turn to another set of guidelines.

The Working Group has asked for comments on their latest WCAG 2.0 Working Draft by June 29, 2007. Now is the time to call for the removal of testability.

Complicated stuff, but it sounds like you know what you’re talking about and have my backing!

Hi Fahed

Thanks – the best way to back me is to lodge a comment against testability!

I’ve said it for a while now, over and over. The various working groups at the W3C just don’t get it at all. Are people forgetting that in some cases people are volunteering their time and effort on these working groups? But then (as we have seen) people on the groups who don’t like the outcome of the discussion just remove the troublemakers, like a political roundtable. It is these troublemakers that are often the only thing keeping the working group in check.

I did wonder about the testability. It did seem a little inconsistent. But Gian you have explained in detail and I now understand why. It sounds strange to remove this. But testing that doesn’t have a yes or no result is basically a waste of time.

Let me clarify this. (before Gian flames me) What we should be doing is removing all testability from the equation. Maybe this needs to be documented elsewhere with examples. So the enforcement and demonstration of the guideline are not in the guideline.

Also, why remove “cognitive disabilities” because it’s too hard? This I’m still trying to understand. Working Group, please explain!

Gian,

I think you’ve made a good case for this. In general, I’ve been very happy with this draft of WCAG 2.0, and I think it’s helpful for developers if checkpoints are testable, but not at the expense of not being able to help those with cognitive disabilities.

What about something that says checkpoints (oh, all right, “success criteria”) must be testable wherever possible? That way success criteria relating to cognitive disabilities etc can be included but testability is still brought into the equation wherever it can be?

Excellent article, it’s rare to find people talk intelligently about WCAG/Accessibility. However, I’m afraid I’d go significantly further. The story you tell of your dealings with the group dovetails with other experts’ experience: that the group has long since stopped being about accessibility, and is now essentially meaningless.

Having worked for a firm that took accessibility _standards_ extremely seriously, I’ve seen the damage that can be done by incomplete comprehension of accessibility guidelines and slavish following of the same. I think it’s highly unlikely WCAG 2 will improve actual usability for anyone, but the damage it is likely to cause externally is huge.

WCAG 2 is incomprehensible in the extreme, prescriptive in the wrong ways and permissive in the wrong ways. I understand that testability is the cause of a lot of this, but so is the dynamic of the working group. The absence of any actual research into the behaviours of impaired users renders the whole endeavour bizarre. (a criticism that could also be levelled at WCAG 1)

In short, it’s time to stop taking WCAG 2 even vaguely seriously and work on a competing standard. It seems the number of accessibility experts with serious reservations about WCAG 2 exceeds the number in the working group. I know it’s a significant endeavour, but it’s the only way a number of significant standards advances have occurred. Look at SOAP…

A well stated case, hopefully one that will be listened to!

Standards, guidelines, definitions; they’re all there to help us do our jobs, when they stop helping and start hindering they lose all relevance.

Accessibility, like other user-focused disciplines, relies on a thorough understanding of the needs of the end user, and guidelines can’t replace that, no matter how testable they are…

Maybe I’m missing the obvious, but I’m a tad confused about how the WCAG + Samurai are any better.

How are things like “do not set one confusable colour on top of another”, followed by just one example of “red type on green or black background”, any clearer than what WCAG, for instance, is doing by saying “assistive technology” without specifying which one?

And the almost anti-testability statement in the Samurai’s bit about HTML semantics “Other authors’ disagreement with your choices is not relevant to these errata” … how does that help in checking whether a site actually complies with the errata or not?

Lastly, if even the Samurai clearly states (just like WCAG 2.0) that it “cannot be a claim of full accessibility to people with cognitive disabilities”, how is that “finally a choice”, if one of the primary concerns was indeed how users with cognitive disabilities are being disenfranchised by WCAG 2.0?

Anyway, I’ve gathered these thoughts in a quick post on Accessify – “Gian Sampson-Wild on WCAG 2.0’s concept of testability”:http://accessify.com/news/2007/06/gian-sampson-wild-on-wcag-20s-concept-of-testability/

Hi Patrick

I can’t really answer this question until the final version of the WCAG Samurai Errata are released. There are certainly problems with them – many of which I outlined in my “technical review”:http://samuraireview.wordpress.com/technical-review/. Some areas are confusing, however that is in contrast to WCAG2 where I would say *most* areas are confusing – for instance the WCAG Samurai Errata are ten pages, whereas WCAG2 is close to the 500 page mark.

You are correct that the WCAG Samurai Errata do not address issues faced by people with cognitive disabilities but I am hoping this changes.

Good article, Gian. Yet more proof that the brains behind WCAG2 aren’t the best that money can buy.

I mean, c’mon! Every accessibility guide since the year dot has said “don’t rely on automated tests”, because we’ve known all along that there are some things that you just can’t automatically test for.

The bit about asking ten people and seeing if eight of them agree – that’s like saying “it’s raining today, it’s proof that the climate is becoming wetter”. How do you select your ten people? How do you know they represent a fair and unbiased sample group? How do you know that the next ten people won’t result in eight of those ten giving the opposite viewpoint? Do we need to sample ten groups of ten, to make sure that 8 out of 10 give the same answer eight times out of ten? But then we might have as little as 64% agreement – so that would no longer be sufficient.
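A rough way to put numbers on that sampling worry is sketched below. It rests on an assumption that is not part of the WCAG definition: that each evaluator independently reaches the majority conclusion with the same probability, so the count of agreeing evaluators in a panel follows a binomial distribution. The function name `prob_panel_passes` is hypothetical.

```python
from math import comb


def prob_panel_passes(true_agreement: float, panel_size: int = 10, needed: int = 8) -> float:
    """Probability that at least `needed` of `panel_size` evaluators agree.

    Assumes each evaluator agrees independently with probability
    `true_agreement` (a simplifying assumption, not from the guidelines).
    """
    p = true_agreement
    return sum(
        comb(panel_size, k) * p**k * (1 - p) ** (panel_size - k)
        for k in range(needed, panel_size + 1)
    )


# A criterion that sits exactly at 80% agreement in the wider population
# passes an eight-out-of-ten panel only about 68% of the time.
print(round(prob_panel_passes(0.8), 3))  # 0.678

# A criterion at 70% agreement, which "should" fail, still passes
# about 38% of the time.
print(round(prob_panel_passes(0.7), 3))  # 0.383
```

Under those assumptions, a single panel of ten says very little about whether the 80% threshold is really met.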

You don’t need to be a degree-level statistician to see just how fatuous the “human-testable” guideline is.

A lot of accessibility guidelines _can_ be machine-tested. But there are also a lot that can’t. Colour combinations may not always be testable, if you’re looking at the effects of juxtaposition of colours. Kincaid reading scores will tell you if you’re using too many words like “juxtaposition”, but there’s a *lot* more to “use the simplest language that is appropriate” than that. Relevant alt text, consistent layout, logical grouping of elements (esp form elements), even semantic code – these are all, to a greater or lesser extent, subjective. They can’t reliably be tested, but it is still vital that all authors do _as much as they can_ to ensure that these guidelines are met.

Patrick raises some good points in his post on Accessify about this article. I tried to comment but seeing as I got an error I thought I would add the comments here instead.

*Guideline 1.1.1*

Patrick says: “I don’t quite follow why the form (All non-text content has a text alternative that presents equivalent information, except for the situations listed below) that’s now in the latest working draft is supposed to be bad.”

It’s actually the four sub-sections that are the problem – which didn’t make it into the article because it made the page too long! These four sub-sections are:

1. Controls-Input: If non-text content is a control or accepts user input, then it has a name that describes its purpose. (See also Guideline 4.1.)

2. Media, Test, Sensory: If non-text content is multimedia, live audio-only or live video-only content, a test or exercise that must be presented in non-text format, or primarily intended to create a specific sensory experience, then text alternatives at least identify the non-text content with a descriptive text label. (For multimedia, see also Guideline 1.2.)

3. CAPTCHA: If the purpose of non-text content is to confirm that content is being accessed by a person rather than a computer, then text alternatives that identify and describe the purpose of the non-text content are provided and alternative forms in different modalities are provided to accommodate different disabilities.

4. Decoration, Formatting, Invisible: If non-text content is pure decoration, or used only for visual formatting, or if it is not presented to users, then it is implemented such that it can be ignored by assistive technology.

*Subjective words*

With regards to your comment on the use of subjective words such as “inconsistent”, “predictable” etc in WCAG2 – it really comes down to the definition of “testability”. In my time on the Working Group it appeared to me that testability was applied quite strictly to certain checkpoints – such as the clear and simple language checkpoint – whereas it was more lax on other checkpoints – such as alt attributes. One could argue that eight out of ten people couldn’t agree on whether the result from activating a search is “predictable” – will it provide ten search results? twenty? Will it include summary information? etc. If we are going to allow subjective terms like “predictable” then what is the problem with other subjective terms like “clear” and “simple”?

*The WCAG Samurai Errata*

Another area of confusion with WCAG2 (once again due to testability) is that it increases the complexity of the success criteria. Because WCAG2 is technology-neutral, the guidelines have to be testable in a technology-neutral way. Therefore a simple checkpoint in WCAG1 like “Title each frame to facilitate frame identification and navigation” becomes “The destination of each programmatic reference to another delivery unit is identified through words or phrases that either occur in text or can be programmatically determined.” It’s not until you drill down to the techniques that it becomes clear that this success criterion is actually about frames at all. The WCAG Samurai Errata is certainly clearer when it comes to frames (it says not to use them!)

In my conclusion I wasn’t trying to say that the WCAG Samurai Errata are a better set of guidelines – I was attempting to say that if there are easier to use guidelines available then developers will turn to them, instead of WCAG2. I see that being a real problem in the accessibility community – developers choosing one set of guidelines while Government and management choose another.

*Cognitive disabilities*

I am really hoping that the WCAG Samurai address issues faced by people with cognitive disabilities. I see it as a way to really set the two guidelines apart. I believe the Working Group is wrong to say there isn’t much research in the area; a set of guidelines can be developed.

*In conclusion*

When it comes to testability I think it was a noble aim. I think it would be fantastic if every accessibility requirement could be defined in a testable way. However, for those requirements that can’t (eg. clear and simple language), the requirement should not be excluded from WCAG2. At the end of the day, if it helps people with disabilities use the web then it should be included in the accessibility guidelines – whether they be WCAG2 or the WCAG Samurai Errata.

bq. for instance the WCAG Samurai Errata are ten pages, whereas WCAG2 is close to the 500 page mark

that’s not really comparing like for like, though. the normative part of WCAG 2, minus the appendices, is just over 10 pages as well. you could say that, to understand WCAG 2, you need all the other material and techniques relating to each SC, but that is just as true for the Samurai – only that there is no techniques document for Samurai yet, so you have to track down the related best practices etc yourself.

also, Samurai only applies to HTML/CSS, with added bits specific to PDF and some things that could reasonably be applied, at least in principle, to other formats like Flash…while WCAG 2, as you noted of course, tries to be tech agnostic, thus making it far more relevant for areas such as e-learning, for instance…

…and sorry for the current commenting issues on Accessify – there seem to be some gremlins in the works there.

bq. Relevant alt text, consistent layout, logical grouping of elements (esp form elements), even semantic code — these are all, to a greater or lesser extent, subjective. They can’t reliably be tested, but it is still vital that all authors do as much as they can to ensure that these guidelines are met.

if they’re subjective, and not testable, how can you ever hope to enforce them, say within an institution? and if the guidelines are taken as a basis for legislation, would you still want to wait for case-law to clarify each point (maybe having a jury of 10 people, or just a – non tech savvy – judge)?

i don’t think there was any need to rail on about the brains behind WCAG 2 and automated testing, as that’s not what the guidelines emphasise, quite clearly.

bq. you could say that, to understand WCAG 2, you need all the other material and techniques relating to each SC, but that is just as true for the Samurai — only that there is no techniques document for Samurai yet…

I don’t believe there ever will be techniques for the WCAG Samurai Errata (although I could be wrong) – simply because they aren’t required. The Errata provide enough strategic detail that further, background documents are unnecessary.

bq. also, Samurai only applies to HTML/CSS [whereas] WCAG 2, as you noted of course, tries to be tech agnostic, thus making it far more relevant for areas such as e-learning, for instance…

I think this is the only way to write accessibility guidelines – make them technology specific. Create a set of guidelines that apply to the most common technologies, then create versions that cover other technologies.

If WCAG2 removed the testability requirement, addressed the concerns around people with cognitive disabilities, and developed a set of technology-specific guidelines, with addendums for alternative technologies, then it could be a very powerful document.

bq. if they’re subjective, and not testable, how can you ever hope to enforce them, say within an institution?

WCAG1 was subjective and not testable and it was enforceable: we had a court case against the Sydney Organising Committee for the Olympic Games to prove it. But regardless – it is the Working Group’s responsibility to create guidelines that assist people with disabilities to use web sites, not make sure those guidelines can be enshrined in law or that they are enforced within an institution. The W3C has said time and again that they do not write policy: they write guidelines. They don’t write laws. They don’t write rules. They write *guidelines*.

I am just beginning to scratch the surface of web standards and accessibility, and it takes real-world experience and personal discovery before I am capable of understanding why any of it is important at all. Sure, in school they wax poetic about the importance of the W3C and the WCAG in particular, but none have bothered to participate in the WCAG personally.

It sounds to me like the people pushing testability through are the ones who enjoy the political game or are encased in the impenetrable walls of academia, where time is in abundance and implementing their guidelines/lofty goals is within reach of no one else. Testability at the expense of accessibility? Perhaps this is why I get so frustrated and confused when attempting anything beyond the basics.

If WCAG 2.0 is too hard to understand, or not clear enough to be sure we can (and how we can) comply with it, I doubt that very many people on the job will be looking to the Samurai Errata for clarification, and fewer still managers (people who decide what the standards and the testing criteria will be) will use Samurai. How many web workers even KNOW about that?

Instead, I see WCAG 1.0 hanging around for a long, long while. We’re used to it. We can comply with it (when we really, really have to).

When 2.0 is doable, we’ll do 2.0.

that’s been the problem with WCAG 1, and it could become a problem if WCAG 2 was rewritten to be technology-specific rather than agnostic + good techniques documents. also, new best practices emerge all the time (even just for HTML). enshrining the current practices into guidelines (which seem to take forever to be amended and updated) may result in the same issues that actually led to WCAG + Samurai in the first place.

as for SOCOG, weren’t the things that were wrong with the site technical (missing ALT text on images and image maps, tables that didn’t linearise properly) rather than subjective?

there shouldn’t have been an underline in my above post.

The final version of the WCAG Samurai Errata isn’t out yet, and it’s disingenuous to blow up your favourite failing of the Errata into a claim that the whole thing is naff. Give us time, please.

This is a great explanation of some of the issues surrounding a “testable” requirement in any standard, particularly in reference to usability. Interestingly, this exact issue is coming up for the new U.S. federal voting machine standards.

I recommend anyone interested in usability standards to review the latest draft of the “standards”:http://vote.nist.gov/meeting-05212007/VVSG-Draft-05242007.pdf , known as the _Voluntary Voting System Guidelines_ (VVSG). Relevant comments should be sent to voting@nist.gov

I’ve voiced my frustration with WCAG in other venues. It’s academic and arcane and hasn’t fostered a movement towards accessible sites, leaving it still as nothing more than a hammer for lawyers or a Shining Torch of Truth for standardistas.

But I’m not sure if removing testability is the right idea. The problem is that common users _need_ testability. Most sites for businesses and academic units aren’t created by design firms or standardistas; they’re created by people with basic knowledge and basic tools. The best way to get them to accessibility is to give them tools to build and validate, and the creators of those tools need guidelines reflecting what they should be testing for.

Is the problem here the mission? Maybe WCAG 2.0 is trying to do too much here. Maybe the problem here is that the working group is trying to write Leviticus when all they need is to deliver the Ten Commandments and let others meet to come up with the 637 laws that stem from them.

In non-religious terms, maybe WCAG 2.0 needs to be just the most basic guidelines, written to be as clear and unwordy as possible, with an eye to _other_ working groups interpreting these guidelines into rules germane to specific uses. In other words, blow out 90% of the document, write some basic and general statements, and then punt it to others to try and figure out what that means for specific instances.

I’m not arguing for a market-based creation of standards. What I’m saying is that the WCAG works on not coveting their neighbor’s ass, not about whether that ass is kosher for Passover.

Unfortunately, I think it’s too late for that. We’re stuck with the muddle we’re in now, and it’s just going to continue what I consistently see at my level — the common web person blowing off accessibility because it’s not “easy” and lacks a simple validation methodology.

In my view, technology-specific guidelines are better because of the relative ease of use. Yes, they expire with technology, but what is the alternative? So far, it seems to be either 1) inordinately long development time for technology-agnostic guidelines which require technology-specific examples for clarity, or 2) updated and/or new guidelines for the natural evolution in technologies.

If there’s a third way, I haven’t seen it yet, and I’m starting to think option 2 above is the more natural choice.

The WCAG as an organisation is becoming increasingly inaccessible to the wider web community which, in itself, is an amusing paradox.

The core consideration that undermines WCAG’s relevance is accessibility of the web to the authoring community, not just to website users. Guidelines that prevent the average punter from publishing web content easily are counter-productive to the development of the internet as a whole. Which is probably why WCAG 2.0 is never going to find endorsement by any legal authority.

The internet’s core area of accessibility is the dissemination of content by individuals who have access to a device capable of FTP/HTTP. When guidelines are created which prevent this, they will largely be ignored. Which is what we have seen to date and will continue to see in the future.

I definitely agree that the main issue at stake here is the lack of consultation with community groups who work directly with disabled people. Guidelines should be formed from the input of these groups, and those of us with the technical skills to translate those needs into real coding approaches should then turn those guidelines into a formal working document. At the moment, I see far too many websites with a WCAG validation logo that don’t even come close to being accessible to large sections of the disabled community. Now, do you think this helps the WCAG’s credibility, or hinders it?

bq. maybe WCAG 2.0 needs to be just the most basic guidelines, written to be as clear and unwordy as possible

Hmm. Like… this?

* 1.1 Provide text alternatives for any non-text content so that it can be changed into other forms people need such as large print, braille, speech, symbols or simpler language

* 1.2 Provide synchronized alternatives for multimedia

* 1.3 Create content that can be presented in different ways (for example spoken aloud, simpler layout, etc.) without losing information or structure

* 1.4 Make it easier for people with disabilities to see and hear content including separating foreground from background

* 2.1 Make all functionality available from a keyboard

* 2.2 Provide users with disabilities enough time to read and use content

* 2.3 Do not create content that is known to cause seizures

* 2.4 Provide ways to help users with disabilities navigate, find content and determine where they are

* 3.1 Make text content readable and understandable

* 3.2 Make Web pages appear and operate in predictable ways

* 3.3 Help users avoid and correct mistakes

* 4.1 Maximize compatibility with current and future user agents, including assistive technologies

If you don’t understand these in enough detail, there are checkpoints to spell it out. If you don’t understand _those_, there are techniques to define whether you’ve met them. And if you still don’t comprehend, the Working Group has even documented _why_ each one is necessary.

It seems most of the criticism here is that WCAG 2 isn’t at the same time universally applicable, comprehensive, precise beyond a shadow of a doubt, and capable of being taught to developers over the course of an elevator ride. I know! Maybe a unicorn could deliver it with a free iPhone, too!

I must second Matt and Andrew’s comments. Much of the criticism of WCAG 2.0 is due to a few, relatively minor rough edges. Forget the W3C process and politics for a minute and read the document from an objective point of view and you’ll find it to be quite good. It’s far from perfect, but good. Suggestions that we toss out the baby with the bath water will result in no progress in accessibility.

If we want WCAG 2.0 to be adopted or adapted in broader realms, particularly in legal arenas where it will have the broadest impact, then some level of testability is vital. Does this make things more difficult? Absolutely. Is the 8 out of 10 thing absurd? Yep. Should WCAG 2.0 include some provisions for guidelines that are not absolutely testable? Yes, or they should drop the guidelines that are pseudo-testable (what is “lower secondary reading level” anyways?).

I think many in the community simply give WCAG 2.0 way too much credence. Our goal should be accessibility, not simply compliance. WCAG 2.0 should be one of many tools we use. If the tool isn’t perfect, use it for what it’s good for and ignore the parts you think are broken. Better yet, if you think you can make it better, get submitting those comments before Friday.

I agree that testability has interfered with the ability to include some cognitive Success Criteria and that as a result those cognitive techniques were included as advisory. But I have trouble with the logic of wanting to remove testability. WCAG 2.0 has most of the common techniques for cognitive disabilities that we would find among recommendations from leading cognitive experts. The objection is that WCAG has made them advisory, not that they have not included cognitive techniques.

But if we remove testability to get the Cognitive Techniques out of advisory then the *entire* WCAG becomes advisory. We would not have accomplished anything that I can see.

The spectrum of cognitive disabilities is wider than any other disability group. I think there is much benefit in looking at all the guidelines for places where they impact *some* people with cognitive issues.

I agree that there are some Level 3 issues such as Acronyms that I would have liked to see at Level 2, but in general there are many Guidelines whose primary target may be blind people or people with other disabilities which also give a substantial improvement in accessibility to some people with cognitive issues.

In looking at Level 1, I would say there are 9 Success Criteria that improve access for some people with cognitive disabilities. For instance, I know a lady who has a form of dyslexia that prevents her from using a mouse. For her, every Success Criterion that makes a web site keyboard accessible is a benefit; in fact, these Success Criteria are crucial to her employment. Some people with cognitive issues may benefit from having headings which are programmatically determined, because they may use a User Agent which takes advantage of heading levels. Guidelines that prevent the web site from changing focus unexpectedly help some people with cognitive issues. Guidelines that extend time-outs help some people with cognitive issues who are slower to respond. Contrast and flashing related guidelines help some people with cognitive disabilities. The 4.1 guideline that makes sure all content of the site meets Level 1 swings us back around to apply these issues to other technologies, or at least to provide the content in another technology which does conform.

When we look at the guidelines through the eyes of different kinds of cognitive issues we find many Success Criteria that help many different kinds of cognitive disabilities.

It’s only when WCAG tries to become law that issues such as conformance, conformance level, testability, normative vs. non-normative, advisory, etc become issues.

Let’s look at WCAG for what it is: a great collection of guidance created by a body of experts from around the globe. Let’s stop trying to make it a standard. Please let’s stop trying to harmonize the world’s law to WCAG. After all it’s the Web Content Accessibility GUIDELINES not the Web Content Accessibility Law. (Or is it?)

I could never agree that we need to end testability. As a member of Industry, I need a validator that will let me know if the thousands of pages here will conform. I can’t check each by hand. I realize that at best only part of the guidelines can be machine testable, but even having part of them is better than none when faced with such a large web site. I would rather see more testing over none!

“Patrick Lauke”:http://www.alistapart.com/comments/testability?page=2#14

bq. if they’re subjective, and not testable, how can you ever hope to enforce them, say within an institution? and if the guidelines are taken as a basis for legislation, would you still want to wait for case-law to clarify each point (maybe having a jury of 10 people, or just a — non tech savvy — judge)?

There are so many things that can’t be quantified, but can still be verified. Many points of law *do* require subjective interpretation, and there’s no problem with that.

There are plenty of things that are mechanistically testable, and that’s great. We can still have automated reports that analyse *most* aspects of accessibility, which will be very useful to developers, particularly those new to accessibility.

But we shouldn’t sacrifice good practice for the sake of having testable guidelines. There are some aspects of accessibility that are not mechanistically testable, and I think it’s OK to say that, and leave it there. The farcical attempts to define “reliably human testable” make it messy.

The guidelines are here to serve accessibility, not the other way round!

Interesting article. I must admit I sometimes read W3C specifications with some frustration – sometimes because the language feels too academic (by trying to be too precise, and ending up being obscure), often because the specifications AREN’T testable, e.g. the CSS specifications, which leave too much to interpretation (personally I’d have preferred a set of tests produced as part of the specification, rather than as an incomplete afterthought).

However, the WCAG guidelines are guidelines and not a specification, so I agree, aiming to make them completely testable doesn’t work. Personally, I’d like them to be expressed as a series of points, each illustrated by a set of patterns and anti-patterns, each explaining why one is good from an accessibility point of view, and the other bad…

In three subsequent paragraphs under the heading ‘Cognitive disabilities neglected’, Gian Sampson-Wild implies that she was removed from the Working Group because of the way she voted.

To me, this is bullying. Vote the way we want you to, or you are out. I find this repugnant and morally reprehensible.

This is a very serious allegation and it needs to be taken seriously. If there is basis to these claims, and I’m sure Gian Sampson-Wild believes there is, then action needs to be taken. Bullying in any environment is unacceptable.

Joe,

you argue that “The final version of the WCAG Samurai Errata aren’t out yet, and it’s disingenuous to blow up your favourite failing of the Errata into a claim that the whole thing is naff”.

Yet it seems to be perfectly acceptable to attack what is not the final version of WCAG 2.0 on the basis of certain bits of it that people don’t like. That’s not just you by the way; I did it as did many others.

But to avoid having double standards it has to be reasonable to critique early versions of the WCAG errata thingummy in exactly the same way… you’ve proven you can dish it out; now you’ve got to show you’re big enough to take it.

FWIW, I think you are, and I think you’ll get it right. But that doesn’t mean you should be immune from criticism, and nor do the self-appointed and secretive Samurai deserve any more protection than do the WCAG WG…

bq. In three subsequent paragraphs under the heading ‘Cognitive disabilities neglected’, Gian Sampson-Wild implies that she was removed from the Working Group because of the way she voted.

I was a participant in the WCAG WG at the time, and I can say that nobody “removed” her from the group, period. It is possible that she was notified that she had not met the requirements for good standing (i.e., she missed 2 of the last 3 teleconferences and/or 2 of the last 3 face-to-face meetings), which would have been restored once she resumed attending the calls. What she neglects to mention is that she missed six consecutive calls (_and_ six techniques calls) between February 26 and April 22, 2004, according to the group’s minutes.

http://www.w3.org/WAI/GL/minutes-archive.html

And yet, even she admits that those in attendance were opposed — _unanimously_ — to her proposal, and reiterated that opposition the following week.

Occam’s razor, people. Her standing was changed because she was not actively participating. No conspiracy necessary.

Now, Jack, our esteemed colleague Patrick H. Lauke did what I said he did. My previous article on WCAG 2 was a somewhat different animal. Care to compare and contrast?

* Your one-liner list of WCAG 2 headlines is great as far as it goes, but in fact people _do_ need accessibility guidelines to be, at once, instantly gleanable, fully detailed, implementable without indecision, and actually likely to improve accessibility for people with disabilities. That may be an impossible dream, which would be a fair point of criticism. But maybe — just maybe — WCAG 2 as it stands is _too far away_ from these requirements.

* Gian has stated publicly that she was very sick during the time she missed the phone calls. At least one WCAG WG member knows this firsthand. Illness is a disability, and the WCAG Working Group did not accommodate that disability short of undue hardship. The issue passed a vote “unanimously” only after W3C technicalities were deliberately used to remove Gian from the group.

Matt, you don’t work for the W3C anymore. It is no longer necessary to engage in Stockholm-syndrome-like defences of the organization. WCAG WG busts people who disagree or who threaten to withhold “consensus.” That’s how they operate; that’s why they’re bullies; that’s why so very few of us trust them anymore.

my right honourable friend joe may have misinterpreted my reason for the apparent statements of “naffness” of WCAG + Samurai.

for the record, i do like the Samurai document a lot, barring a few minor nitpicks.

what i was referring to in my comments ( “such as number 8”:http://www.alistapart.com/comments/testability?page=1#8 ) was not meant to take away from it, but rather to ask Gian for clarification on why she felt it was a better solution to her fundamental problem with WCAG 2, leading to one of her final statements:

bq. With the publication of the WCAG Samurai Errata, the web community finally has a choice …

which seemed like a non-sequitur to me, since Samurai has the same fundamental problem for cognitive disabilities in particular.

First off, consensus _is not_ unanimity. If one participant stands apart from the rest on a point like this, they can raise a formal objection, which Gian has, and which will result in the group having to defend their decision. (Again.) Still, good standing would never have afforded her the privilege of lying down in the road to get her way.

In this case, the argument seems to be that the proposal she made, which didn’t receive _any_ support from a group made up of a pretty even mix of advocates, academics and technical folks, should be overturned because… why? Because some bureaucratic status that is about as relevant to the situation as her eye color was changed around the same time? Because it somehow got into ALA? And now, because she was sick? (The messages she sent to the list to excuse herself at the time usually indicated she was “travelling.”) No matter how many times you try to explain away how it didn’t go the way you wanted, it’s still a bad idea.

With regard to the perceived trustworthiness of W3C, and WAI in particular, well, take a bow. You’re the one who’s responsible for that, thanks in large part to your vividly creative storytelling. But that’s worth an article unto itself.

bq. …nobody “removed” her from the group, period…

I said: “my status as a member (in good standing) was revoked due to ‘non-participation.'”

There were other members in the Working Group that did not fulfil the requirements of attendance. And, as Matt points out, I had not been meeting my attendance requirements for some time. However, it was only when I stopped consensus on testability that my status was revoked. It was not “some bureaucratic status that is about as relevant to the situation as [my] eye color” – it was the reason why I could not vote against testability. And this “bureaucratic status” wasn’t “changed around the same time” as my vote against testability – it was changed *within 24 hours* of my vote against testability.

To clarify, I was actually removed from the Working Group at a later date (which Joe Clark refers to). In the above instance I was living in rural Queensland and travelling to various capital cities for work. Being on the Working Group may not seem too onerous – but it means reviewing up to 100 emails a day, and, in Australia, it means weekly 6am teleconferences on a Friday – as a volunteer. Because I was running my own business for most of that time, I was the one who had to foot the bill for the 90 minute international telephone calls (long before Skype became available). I did miss some teleconferences. However, it was only when I voted against testability that my status (and voting rights) were revoked. I was so disgusted by this that I remained inactive within the Working Group.

Eight months later all Working Group Members were required to re-apply due to the Patent Policy. I used that opportunity to become active within the Working Group. It took me six months of emails to the W3C Staff Member before I was allowed back on the Working Group – and only after I had sent an email to the entire Working Group and CCed the WAI Steering Committee.

I became very ill in October 2005. I did not work for ten months. During that time I did not participate in Working Group teleconferences or via the mailing list. However it was only after I lodged a record number of comments on the Last Call Working Draft and *after* I had mentioned to an IBM Working Group member that I would be joining the next teleconference did I get told that I was no longer a Member. On the 20 July 2006 I received an email from Judy Brewer saying that my Invited Expert status was about to expire “since you haven’t been an active participant in the WCAG WG recently”. I emailed back that I had been ill. The reason for my removal changed to my apparent lapsed association with my affiliate company. When I argued that my association had not lapsed, the emails ceased.

I have posted the stream of emails between Judy Brewer and “myself”:http://www.tkh.com.au/?p=39

I have also included comments about my removal from the Working Group by various non-corporate members of the “Working Group”:http://www.tkh.com.au/?p=39#wg

Matt May knows that I back up my assertions about WCAG WG with original documents or links to them. This, in the industry, is called documentation, not “vividly creative storytelling,” a cutesy phrase I assume means “lying.” If anyone can prove I have lied about any topic in the last 30 years, please publish your documentation.

The original article (remember that?) was a very good piece about testability.

The last bunch of comments aren’t, and should be taken to personal blogs. Why not comment on Gian’s blog entry that includes the discussions with the working group? They’d be on-topic there.

The notion that the best set of guidelines would be entirely testable seems ludicrous to me but so does the idea that any testability whatsoever is unwarranted. I mean, we _are_ doing this on computers, after all – you’d be leaving entire orchards of fruit on the vine by refraining from having any testable guidelines whatsoever.

For example, “Make all functionality available from a keyboard” is eminently testable, as are many specific cases of the other guidelines, for example, in compliance with “Help users avoid and correct mistakes” no form control should start off in a state that it cannot be returned to – e.g. the infamous set of radio buttons that start with nothing selected, but then once you click one you can’t back out even if it wasn’t a required field… and although the _quality_ of particular alt text can’t be judged by a machine its _presence_ – whether the alt tag is missing or empty, or even whether it contains an entire sentence – _can_ be machine-tested. You could even go so far as to test whether all photographs have alt tags, whether images containing text have alt tags, whether images containing faces have alt tags, et cetera.
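As a rough illustration of the presence check described above, here is a minimal sketch using Python's standard `html.parser` module. The class name `AltAudit` and the function `audit_alt_text` are hypothetical names chosen for this example, not part of any WCAG tool. The sketch only reports whether an `alt` attribute is missing or empty; it makes no judgement about whether the text is any good.

```python
from html.parser import HTMLParser


class AltAudit(HTMLParser):
    """Collects <img> tags whose alt attribute is missing or empty.

    Hypothetical helper for illustration only; it checks presence,
    not the quality of the alternative text.
    """

    def __init__(self):
        super().__init__()
        self.missing = []  # src of images with no alt attribute at all
        self.empty = []    # src of images whose alt attribute is blank

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        src = attr_map.get("src", "(no src)")
        if "alt" not in attr_map:
            self.missing.append(src)
        elif not (attr_map["alt"] or "").strip():
            # alt="" or a bare alt attribute; may be fine for purely
            # decorative images, so this is informative, not a failure.
            self.empty.append(src)


def audit_alt_text(html):
    """Return the images with missing or empty alt text in an HTML string."""
    parser = AltAudit()
    parser.feed(html)
    parser.close()
    return {"missing": parser.missing, "empty": parser.empty}


if __name__ == "__main__":
    sample = (
        '<p><img src="a.png">'
        '<img src="b.png" alt="">'
        '<img src="c.png" alt="A red barn"></p>'
    )
    print(audit_alt_text(sample))
```

A report like this fits the informative style of testing: it points a human reviewer at the images that need attention rather than declaring a page compliant.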

And in the spirit of guiding rather than strict lawgiving, tests need not be for the strict purpose of passing or failing on compliance, they can be informative. I must concur with the previous poster that a technological guideline, an actual test tool or suite of tools, may be appropriate for these parts of the standards.

I do think that the testable and non-testable guidelines should be clearly delineated. I also think that the cost of testability should be considered and ameliorated, particularly when publicly-available tools could be developed, or the existing ones better-used. (But I would note that despite its title, neither the article nor the subsequent discussion has addressed cost.)

I’m sorry, Bruce, but I disagree with you. Discussion of Gian Sampson-Wild’s claims in regards to her removal from the Working Group is valid.

How many on that Working Group are voting in a specific way because that is the way that they have been told/shown is the ‘correct’ way? It could be that others agreed with Gian on testability but were too afraid to vote in the way that they truly felt.

Is there bullying in other Working Groups in the W3C? Is the W3C creating documents to the best of all combined abilities?

These questions need to be asked considering that at least one other person has made similar claims of this Working Group and there seems to be no response from the W3C about it; tacitly condoning the alleged type of behaviour.

I’ve written up “my thoughts in more detail”:http://www.thepickards.co.uk/index.php/200707/wcag-20-testability-testing-times-tetchiness/ but taking into account Bruce Lawson’s quite sensible pointing out that we’re discussing testability rather than Working Group status or otherwise, I’ll not go into detail here.

You can either nip over to my site to read my long and rambling opinionated rant, or not. I honestly don’t mind.

For ALT text, the test is surely a self-critical question to the designer: “Does the alt-text I’ve used give the reader an idea of what the image is trying to convey, or what my purpose in including it in the web page was, if for some reason the reader can’t see it?”

For any image, there will be a range of acceptable alt-text, so the answer to the question will only be a matter of opinion, informed or not. That’s not something that’s testable by machine, is barely testable by humans, and certainly not with any degree of consistency (at the required 80% level for WCAG2).

If it’s not testable, you can’t measure compliance, hence the perceived reduction in usefulness of WCAG2 to purchasers of websites as a standard to aim for. Sounds like sticking strictly to the letter of WCAG2 could result in less usable/accessible (sensu lato) websites. I’d rather stick to the spirit and use techniques shown to enhance accessibility/usability. Actual demonstration of that to a user/client would be better than certification.

We do test our product, though accessibility testing is, as at many companies, often seen as important. If customers find problems, the issues are fixed pretty swiftly.

As a designer I have never got into WCAG2, as it seemed too little used and too vague. Maybe I just haven’t got the time to learn another set of standards, as WCAG1 was heavy enough.

Testability is unfortunately a must for the many many people, including the W3C and its members, who make money through accessibility consultancy – it allows them to say “This is exactly right, and this is exactly wrong”, which helps a lot and leaves no uncertainties or arguments or incorrect online testing systems.

However, Gian is exactly right – many of the most important elements of the current set of the WCAG are untestable – these elements also go hand in hand with search engine optimisation and usability/general user experience for even able-bodied users.

The document defines testable as “machine-testable” or “reliably human testable”, which means that eight out of ten human testers must agree on whether the site passes or fails each success criterion.
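To make the eight-out-of-ten rule concrete, here is a small sketch of how such an agreement check might be computed. The function name `reliably_human_testable` and the `"pass"`/`"fail"` labels are assumptions made for this example; WCAG 2.0 does not prescribe any such procedure or code.

```python
from collections import Counter


def reliably_human_testable(verdicts, required=8, out_of=10):
    """Check whether testers agree often enough on one verdict.

    `verdicts` is a list of labels such as "pass" or "fail", one per
    human tester. Returns (agreed, majority_verdict), where `agreed`
    is True when at least required/out_of of the testers gave the
    same verdict. Hypothetical sketch, not a WCAG-defined algorithm.
    """
    if not verdicts:
        raise ValueError("at least one verdict is needed")
    majority_verdict, count = Counter(verdicts).most_common(1)[0]
    # Integer comparison avoids any floating-point rounding at the
    # exact 80% boundary.
    agreed = count * out_of >= required * len(verdicts)
    return agreed, majority_verdict


if __name__ == "__main__":
    print(reliably_human_testable(["pass"] * 8 + ["fail"] * 2))
    print(reliably_human_testable(["pass"] * 7 + ["fail"] * 3))
```

Note that the check only measures agreement among the testers; it says nothing about who those testers are or whether any of them have a disability.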

The truth is that disabled users are not machines and 8 out of ten human beings aren’t disabled. In reality, the W3C should get these guidelines approved not by a group of able-bodied front-end developers who are going to have to use and adhere to them, but by the very people they are intended to help the most: disabled users. And with the multitude of disabilities out there, it’s about time we started asking all of them what they think and what they would want from websites.

I cannot agree strongly enough with Aidan Williams – we need to start testing with, and talking to, people with disabilities.

This group of people is unfortunately under-represented in the WCAG Working Group. Often when we worked in Working Group sub-groups to revise specific success criteria we were split by geographic location, not by interest or knowledge of a particular area of disability. But this is not a problem relevant only to the Working Group: I believe the entire accessibility community needs to engage with their users more.